The Unsexy Reality of Serverless AWS Cost & Reliability

Why this matters this week

AWS bills for Lambda, API Gateway, Step Functions, DynamoDB, SQS, EventBridge, Kinesis, CloudWatch, and “misc” just landed for the month. Teams are discovering:

- A “cheap” serverless migration quietly doubled their spend.

- P95 latency blew up after a seemingly harmless change to an event-driven workflow.

- An incident investigation stalled because nobody could trace a request across five serverless services.

This isn’t about shiny new AWS launches; it’s about a pattern that keeps repeating:

Teams adopt serverless to “simplify” infra, but re‑create distributed systems complexity—without the guardrails they had on EC2/EKS.

If you run production on AWS and care about reliability, cost efficiency, and observability, you need a more opinionated approach to serverless architecture, not more services.

What’s actually changed (not the press release)

None of this is “new,” but three shifts have reached critical mass:

Everything wants to be event-driven now

- SNS, SQS, EventBridge, Kinesis, DynamoDB Streams, S3 events, Step Functions — it’s trivial to glue services together.

- The friction to add yet another async hop is near zero.

- Result: Workflows that used to be a single HTTP call become 6+ hops across 4 services.

Pricing models reward scale… until they don’t

- Lambda’s free tier and per‑ms billing feel cheap early on.

- But at steady state, the cost curve is dominated by:

- Over-provisioned memory

- Chatty microservices over API Gateway

- Always-on “serverless” databases (e.g., Aurora Serverless v2 configured with a nonzero minimum capacity) for spiky, low-utilization workloads

- The tipping point where containers on Fargate or even EC2 are cheaper arrives sooner than many expect.
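The effect of over-provisioned memory can be sketched with Lambda's pricing arithmetic. This is a rough illustration, not a billing tool: the two price constants are assumptions (approximate x86 on-demand rates for one region, free tier ignored) and should be checked against the current pricing page, and the function name and workload numbers are hypothetical.

```python
# Assumed on-demand prices (x86, single region); verify against current AWS pricing.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def lambda_monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate monthly Lambda cost in USD, ignoring the free tier."""
    gb_seconds = requests * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    request_cost = (requests / 1_000_000) * PRICE_PER_MILLION_REQUESTS
    return compute_cost + request_cost


if __name__ == "__main__":
    # Hypothetical I/O-bound function: duration stays flat as memory grows,
    # so the extra memory only raises the bill.
    for memory_mb in (512, 2048):
        cost = lambda_monthly_cost(100_000_000, 120, memory_mb)
        print(f"{memory_mb} MB: ${cost:,.2f}/month")
```

If duration does not drop when memory is raised, the compute portion of the cost scales linearly with the memory setting, which is why right-sizing is usually the first thing to check.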

AWS has made reliability primitives better, but more fragmented

- Multi‑AZ, regional services, and improved retry/backoff defaults exist.

- But with each new service, you get a slightly different:

- Retry model

- Error surface

- Throttling behavior

- Building a system that degrades gracefully across those services is on you.
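One reason mixed retry models are hard to reason about is that retries at different layers multiply. A minimal sketch of the worst case, assuming every layer retries each failure of the layer beneath it; the layer names and retry counts in the example are hypothetical, not defaults of any AWS service.

```python
from math import prod


def worst_case_attempts(retries_per_layer: list[int]) -> int:
    """Worst-case executions of the innermost call when each layer retries independently.

    Each layer makes 1 initial attempt plus its retries, and every attempt at an
    outer layer can trigger the full set of attempts at the layer below it.
    """
    return prod(1 + retries for retries in retries_per_layer)


if __name__ == "__main__":
    # Hypothetical stack: client retries 2x, queue redelivers 3x, function's SDK retries 2x.
    print(worst_case_attempts([2, 3, 2]))
```

With those assumed counts, one failing downstream call can be attempted 3 × 4 × 3 = 36 times, which is worth knowing before the downstream service is already struggling.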

The net effect: The pain moved from “I have to patch hosts” to “I don’t understand how my own system behaves under load or failure.”

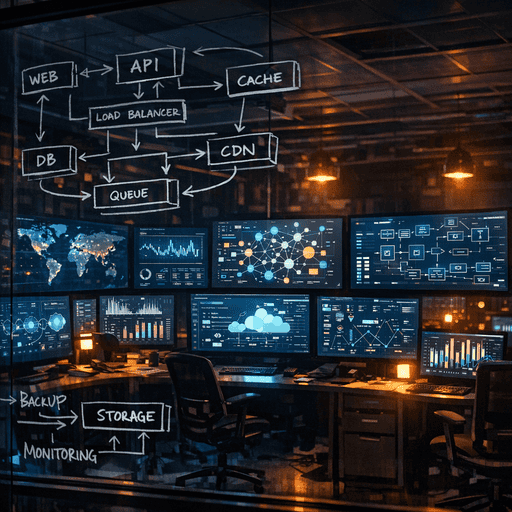

How it works (simple mental model)

Use this mental model to reason about AWS serverless and platform engineering choices:

1. Three planes: Compute, Data, Control

- Compute plane: Lambda, Fargate, ECS on EC2, Batch, Glue. Decide where code runs and in what failure domain.

- Data plane: DynamoDB, Aurora, S3, SQS, Kinesis, Redis/ElastiCache. Decide where state lives and how consistency vs throughput trade off.

- Control plane: EventBridge, Step Functions, API Gateway, AppConfig, IAM, CloudFormation, CDK. Decide how work is orchestrated, secured, and deployed.

Most messes happen when teams blur data plane vs control plane, e.g.:

- Use Step Functions as a database (long-lived state, high write churn).

- Use DynamoDB like a message queue.

- Use SQS as an event log with replay semantics.

2. Two primary failure modes: “Too coupled” vs “Too fragmented”

Too coupled (monolith energy)

- Single giant Lambda behind API Gateway, lots of branching logic.

- Shared database doing everything.

- Pros: Easy to reason about, fewer cross-service hops.

- Cons: Hard to change safely, painful cold starts, single blast radius.

Too fragmented (microservice hairball)

- Dozens of Lambdas, each tiny but chatty.

- Multiple queues/topics per feature.

- Pros: Team-level autonomy (in theory), localized changes.

- Cons: Hard to trace, surprising costs, complex failure modes.

You want “coarse-grained, well-bounded components”:

- Each component:

- Owns its data store.

- Has a small number of ingress/egress patterns.

- Can be run and tested in isolation.

3. Cost as a signal, not a trailing metric

For serverless, cost is often your first indicator of a design problem:

- High Lambda duration but low CPU: the function is likely I/O bound, so the allocated memory (and the CPU that comes with it) is paid for but mostly idle.

- High API Gateway cost vs Lambda: chatty service boundaries or N+1 call patterns.

- High DynamoDB RCU/WCU: non-optimized partition keys, hot keys, or using it as a queue.

Treat the bill as telemetry:

- Look for “unit economics per request” rather than total monthly spend.

- See which service is scaling faster than the workload itself.
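Both checks can be sketched in a few lines. This assumes you can export per-service monthly cost and a request count for the same workload; the `MonthlySnapshot` type, the service keys, and the 10% tolerance are illustrative choices, not anything AWS provides.

```python
from dataclasses import dataclass


@dataclass
class MonthlySnapshot:
    requests: int
    cost_by_service: dict[str, float]  # USD, e.g. {"lambda": 120.0, "apigateway": 300.0}


def cost_per_million_requests(snapshot: MonthlySnapshot) -> dict[str, float]:
    """Unit economics: USD per one million requests, per service."""
    return {
        service: cost * 1_000_000 / snapshot.requests
        for service, cost in snapshot.cost_by_service.items()
    }


def outpacing_services(prev: MonthlySnapshot, curr: MonthlySnapshot,
                       tolerance: float = 1.10) -> list[str]:
    """Services whose cost grew faster than request volume (beyond the tolerance)."""
    workload_growth = curr.requests / prev.requests
    flagged = []
    for service, cost in curr.cost_by_service.items():
        before = prev.cost_by_service.get(service)
        if not before:
            continue  # new service or zero baseline: no growth ratio to compare
        if cost / before > workload_growth * tolerance:
            flagged.append(service)
    return sorted(flagged)
```

A service that shows up in `outpacing_services` month after month is a design smell worth investigating even if its absolute cost is still small.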

Where teams get burned (failure modes + anti-patterns)

1. The “everything is async” fallacy

Pattern: A team moves from a synchronous REST API to an event-driven pipeline using EventBridge, SQS, and multiple Lambdas.

Issues:

- Latency balloons from 200 ms to several seconds or more.

- Debugging requires jumping across 5 CloudWatch log groups.

- Retries at multiple layers cause duplicate processing.

This is often done to “decouple services,” but:

- Many business operations are inherently request/response.

- Async is great for fan-out and buffering, not for basic CRUD flows.

Mitigation:

Keep core user-facing flows synchronous where possible; introduce async only at natural boundaries (notifications, ETL, heavy background processing).
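Where async hops do remain, duplicate deliveries from layered retries are still possible, and a common way to tolerate them is an idempotent consumer. The sketch below is a minimal illustration under loud assumptions: the class name is hypothetical, and the in-memory set stands in for a shared, durable store that a real deployment would need (for example, a conditional write keyed on the message ID).

```python
from typing import Callable


class IdempotentHandler:
    """Processes each message ID at most once per successful run."""

    def __init__(self, process: Callable[[dict], None]) -> None:
        self._process = process
        self._seen: set[str] = set()  # stand-in for a durable, shared store

    def handle(self, message_id: str, payload: dict) -> bool:
        """Return True if the payload was processed, False if skipped as a duplicate."""
        if message_id in self._seen:
            return False
        self._process(payload)
        # Record the ID only after success so a failed attempt can be retried.
        self._seen.add(message_id)
        return True
```

Usage: wrap the business logic once, then call `handle(message_id, payload)` from the queue consumer; a redelivered message with the same ID becomes a no-op.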

2. Observability added as an afterthought

Pattern: System is built using Lambda + API Gateway + SQS + DynamoDB, then later someone tries to “add tracing.”

Issues:

- No consistent correlation IDs across hops.

- Logs in different formats and locations.

- Partial traces because some services are not instrumented or sampling is misconfigured.

Result: During incidents, teams fall back to “grep CloudWatch logs and guess.”

Mitigation checklist:

- Enforce:

- A standard trace ID header (x-correlation-id or traceparent) from the edge.

- Propagation through every function and queue message.

- Use a single log format (JSON) and structured fields (trace_id, request_id, tenant_id).

- Decide up front:

- Which spans need 100% sampling.

- Where you can tolerate sampling vs full retention.
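The propagation part of this checklist can be sketched as three small helpers. The helper names and the `correlation_id` attribute name are assumptions for illustration; the dictionary shapes follow the SQS `SendMessage` `MessageAttributes` format and the camelCase record format Lambda receives from an SQS event source.

```python
import uuid
from typing import Optional

CORRELATION_HEADER = "x-correlation-id"


def get_or_create_correlation_id(headers: dict[str, str]) -> str:
    """Reuse the inbound ID if present (header names are case-insensitive), else mint one."""
    for name, value in headers.items():
        if name.lower() == CORRELATION_HEADER and value:
            return value
    return str(uuid.uuid4())


def to_sqs_message_attributes(correlation_id: str) -> dict:
    """Build a MessageAttributes mapping in the shape SQS SendMessage expects."""
    return {"correlation_id": {"DataType": "String", "StringValue": correlation_id}}


def from_sqs_record(record: dict) -> Optional[str]:
    """Read the ID back from a Lambda SQS event record (keys are camelCase there)."""
    attribute = record.get("messageAttributes", {}).get("correlation_id")
    return attribute.get("stringValue") if attribute else None
```

The producer would pass the result of `to_sqs_message_attributes` as `MessageAttributes` when sending, and the consumer would call `from_sqs_record` on each record before logging anything.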

3. Lambda as a long-running worker

Pattern: Use Lambda for CPU-heavy or long-running jobs (e.g., video processing, large AI inference, big data transforms).

Issues:

- You hit the 15-minute maximum execution time.

- Concurrency throttles other parts of the system.

- Hot-path cost is high compared to Fargate/EC2.

Mitigation:

- If execution is:

- > 5–10 minutes regularly, or

- Requires > 4–8 GB memory, or

- Needs GPU or specialized runtimes

→ Move to Fargate or ECS on EC2 (GPU workloads need EC2-backed capacity, since Fargate does not offer GPUs); use SQS or EventBridge as the trigger.
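The rule of thumb above can be written down as a small decision function so it is applied consistently in reviews. This is only a sketch: the function name is hypothetical, and the default thresholds (10 minutes, 8 GB) are assumed values taken from the upper end of the ranges above, meant to be tuned per team.

```python
def recommend_compute(typical_minutes: float, memory_gb: float, needs_gpu: bool = False,
                      max_minutes: float = 10.0, max_memory_gb: float = 8.0) -> str:
    """Return "lambda" or "container" based on the job's typical profile."""
    if needs_gpu:
        return "container"  # Lambda has no GPU option
    if typical_minutes > max_minutes:
        return "container"  # too close to the 15-minute execution limit
    if memory_gb > max_memory_gb:
        return "container"
    return "lambda"


if __name__ == "__main__":
    print(recommend_compute(typical_minutes=2, memory_gb=1))    # short, small job
    print(recommend_compute(typical_minutes=12, memory_gb=2))   # regularly long-running
```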

4. Over-abstracted “platform” with hidden AWS limits

Pattern: A platform engineering team builds a golden path: “You just push code and get a Lambda + API Gateway + DynamoDB combo.”

Issues:

- Tenants exceed Lambda concurrency quotas.

- SQS queues back up silently because dead letter queue (DLQ) wiring is missing or opaque.

- Engineers don’t know what to do when they hit throughput limits.

Example from a real org:

- A new feature triggered a large spike in S3 events → Lambda → DynamoDB writes.

- DynamoDB provisioned capacity was auto‑scaled, but Lambda concurrency was capped.

- Events piled up and the asynchronous invocations kept being retried; the backlog aged into stale data.

- There were dashboards, but nobody understood them.

Mitigation:

- Document the SLOs and limits of the platform as first-class artefacts:

- Max supported RPS per service by default.

- Concurrency caps and backpressure behavior.

- What “degraded mode” looks like and how it’s triggered.

- Give product teams visibility into infra metrics without needing to know all AWS service names.
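A documented "max supported RPS" can be derived from the concurrency cap with a standard approximation: steady-state concurrency is roughly requests per second multiplied by average duration in seconds. The sketch below assumes a steady arrival rate and a stable average duration; the function names and example numbers are illustrative.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Approximate concurrent executions needed at a steady request rate."""
    return math.ceil(requests_per_second * avg_duration_s)


def max_sustainable_rps(concurrency_cap: int, avg_duration_s: float) -> float:
    """Approximate request rate a concurrency cap can absorb before throttling."""
    return concurrency_cap / avg_duration_s


if __name__ == "__main__":
    # Hypothetical tenant: 200 RPS at 500 ms average duration.
    print(required_concurrency(200, 0.5))
    # Hypothetical platform default: cap of 50 concurrent executions at 250 ms.
    print(max_sustainable_rps(50, 0.25))
```

Publishing both numbers next to each golden-path service gives product teams a limit they can reason about without reading quota pages.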

Practical playbook (what to do in the next 7 days)

These steps assume you’re already on AWS with at least some serverless components.

Day 1–2: Map the critical paths

- Identify your top 3 revenue-critical flows (e.g., “place order,” “run report,” “send invoice”).

- For each, draw a service-level sequence diagram:

- Edge (ALB / CloudFront / API Gateway)

- All Lambdas / containers

- Queues, topics, streams

- Datastores

- Mark:

- Sync vs async hops

- Retries and DLQs

- Cross-region or cross-account calls

Deliverable: A single-page diagram per flow that a new engineer can understand in 5 minutes.
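Alongside the diagram, it can help to keep the same map as data so the marked properties are checkable. A minimal sketch, assuming a hand-maintained list of hops; the `Hop` type, its field names, and the example flow are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    source: str
    target: str
    mode: str  # "sync" or "async"
    max_retries: int = 0
    has_dlq: bool = False
    crosses_region_or_account: bool = False


def async_hops_without_dlq(hops: list[Hop]) -> list[Hop]:
    """Async hops where a failed message has nowhere to land."""
    return [hop for hop in hops if hop.mode == "async" and not hop.has_dlq]


def count_by_mode(hops: list[Hop]) -> dict[str, int]:
    """Number of sync and async hops in the flow."""
    counts = {"sync": 0, "async": 0}
    for hop in hops:
        counts[hop.mode] += 1
    return counts


# Hypothetical "place order" flow.
PLACE_ORDER = [
    Hop("api-gateway", "orders-lambda", "sync"),
    Hop("orders-lambda", "orders-table", "sync"),
    Hop("orders-lambda", "order-events-queue", "async", max_retries=3, has_dlq=True),
    Hop("order-events-queue", "email-lambda", "async", max_retries=2),
]
```

Running `async_hops_without_dlq(PLACE_ORDER)` on this example surfaces the email hop as the one place where failures would be lost silently.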

Day 3: Cost + latency sanity check

Using AWS Cost Explorer and service dashboards:

- Compute per-request cost approximations for each critical path:

(monthly cost for that path) / (monthly requests).

- Check basic latency:

- p50/p95 for entrypoint (API Gateway / ALB).

- Add estimated queue and function durations across hops.

Look for:

- p95 > 1s for simple API-like flows that could be synchronous end-to-end.

- Sudden cost cliffs in:

- API Gateway vs Lambda

- DynamoDB vs Lambda

- Data transfer between regions or AZs

Make a shortlist of 2–3 hotspots (places where cost or latency is clearly out of line with business value).
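The latency half of this check can be sketched as a simple sum over hops. Summing per-hop p95 values is a rough and usually pessimistic stand-in for true end-to-end p95, so treat the result as a screening signal only. The function names, flow names, and per-hop numbers are hypothetical; the 1000 ms budget mirrors the 1s threshold above.

```python
def estimate_end_to_end_ms(hop_p95_ms: dict[str, float]) -> float:
    """Rough end-to-end estimate: the sum of per-hop p95 latencies."""
    return sum(hop_p95_ms.values())


def flag_slow_flows(flows: dict[str, dict[str, float]],
                    budget_ms: float = 1000.0) -> list[str]:
    """Names of flows whose estimated latency exceeds the budget."""
    return sorted(
        name for name, hops in flows.items()
        if estimate_end_to_end_ms(hops) > budget_ms
    )


if __name__ == "__main__":
    flows = {
        "place_order": {"api_gateway": 120, "queue_wait": 700, "worker_lambda": 400},
        "get_cart": {"api_gateway": 80, "cart_lambda": 60},
    }
    print(flag_slow_flows(flows))
```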

Day 4–5: Observability baseline

Implement or enforce:

- Correlation IDs:

- Ensure every ingress request gets a trace ID.

- Propagate across messages (put it in SQS message attributes, DynamoDB items, event payloads).

- Structured logging:

- Switch to JSON logs in Lambdas and containers.

- Required fields:

trace_id, service, function, environment, severity.

- Minimal tracing:

- Enable tracing at the edge (X-Ray on API Gateway REST APIs; for ALB, log the X-Amzn-Trace-Id header it adds to each request).

- Instrument at least the critical path functions.

Goal: You can answer, for a single user request:

Which services did it touch, how long did each take, and where did it fail?
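A structured-logging baseline that carries the required fields can be sketched with the standard library alone. This is one possible shape, not a prescribed format: the `JsonFormatter` and `make_logger` names are illustrative, and the output keys simply mirror the required fields listed above.

```python
import json
import logging
from datetime import datetime, timezone

REQUIRED_FIELDS = ("trace_id", "service", "function", "environment")


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "severity": record.levelname,
            "message": record.getMessage(),
        }
        for name in REQUIRED_FIELDS:
            # Fields arrive via logging's `extra=`; a missing one is logged as null.
            entry[name] = getattr(record, name, None)
        return json.dumps(entry)


def make_logger(name: str) -> logging.Logger:
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers on warm re-initialization
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = make_logger("orders")
    log.info("order accepted", extra={
        "trace_id": "hypothetical-trace-id", "service": "orders",
        "function": "place_order", "environment": "dev",
    })
```

With every service emitting the same keys, a single query on trace_id across log groups can answer most of the question above.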

Day 6: One targeted refactor

Pick one hotspot from earlier and implement a small but clear improvement, such as:

- Replace a Lambda → API Gateway → Lambda chain with:

- Single Lambda doing both