Stop Hoarding Prompts: A Pragmatic Guide to AI Privacy, Retention, and Policy-as-Code

Why this matters this week

Over the last month, three patterns have kept coming up in conversations with engineering leaders:

- “Legal just froze our AI pilot,” because nobody can answer simple questions like:

- Where does user data go?

- How long is it kept?

- Who can see it?

- Can we prove that?

- SOC2 / ISO auditors are now explicitly asking about LLM usage. Not just “Do you have a security policy?” but:

- How do you log AI access and decisions?

- How do you prevent sensitive data from leaving your boundary?

- How do you retire AI-related data on schedule?

- Shadow AI is everywhere. Teams are quietly wiring prompts into production flows, storing everything “for analytics later,” and assuming “the vendor is compliant so we’re fine.”

You’re probably already running:

– Chat-based internal tools,

– LLM-assisted customer support,

– Embedding-based search over internal docs.

If you don’t align those with your data retention, privacy, and governance posture now, you will eventually:

– Fail an audit,

– Block a key deployment,

– Or worse, leak data in a way that’s technically “within the vendor’s TOS” but completely outside your own risk appetite.

This is not about abstract “AI ethics.” It’s about:

– Contract risk,

– Regulatory exposure,

– And whether you can answer basic questions from a board or auditor without scrambling through Slack threads.

What’s actually changed (not the press release)

Three concrete shifts since early 2024 that matter to shipping teams:

- Vendors added toggles; default behavior is still risky.

Many foundation model providers now offer:

- “No training on your data”

- “Enterprise data isolation”

- Region pinning

But:

- These are often per-API-key or per-project, not global.

- SDK defaults may not match your contract (e.g., fallback to non-enterprise endpoints).

- Managed products (chat widgets, copilots) may still log full prompts for “quality improvement.”

So “we’re on the enterprise plan” ≠ “we’re aligned to our privacy policy.”

- Regulators quietly tightened expectations.

Without quoting specific regs, the practical impact is:

- If you process personal data, your LLM integration is now in scope for privacy and security controls (PIAs / DPIAs, records of processing).

- If you have SOC2 / ISO 27001, auditors expect AI to be governed like any other system:

- Asset inventory,

- Change management,

- Logging and monitoring,

- Data retention and deletion.

- Model risk is now part of vendor risk.

Legal and security teams have started asking:

- What training data does this model contain?

- Is there a risk of “memorized” secrets being regurgitated?

- How do we constrain and audit prompts to avoid policy violations?

That pulls model risk management into the same bucket as:

- Third-party SaaS review,

- Data processing agreements,

- Access review processes.

The net: AI is leaving “experiment land” and entering the same governance universe as your CRM, error tracking, and logs—but with messier data and worse defaults.

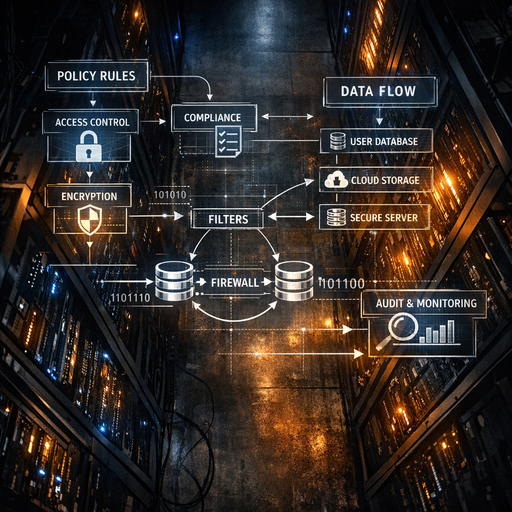

How it works (simple mental model)

Use a 4-layer model when thinking about privacy & governance for LLM systems:

- Data at the edge (what you send)

- User prompts, context docs, logs, telemetry.

- Categories:

- Public / non-sensitive

- Internal but non-personal

- Personal data (PII / PHI / financial)

- Highly confidential (trade secrets, keys, internal strategies)

Key question: What categories are allowed to ever touch an external model?

- Processing boundary (where the model runs)

- External multi-tenant API

- Vendor-hosted single-tenant environment

- Your own VPC with vendor-managed runtime

- Fully self-hosted model

Each step inward buys you more control and auditability, at the price of higher cost and operational burden.

This is the main axis of model risk and data residency control.

- Persistence layer (what’s stored and for how long)

This has three distinct surfaces:

- Vendor persistence:

- Request/response logs

- Training data ingestion

- “Quality improvement” datasets

- Your app’s persistence:

- Prompt/response logs (for debugging)

- Conversation history (for product features)

- Vector stores for retrieval-augmented generation

- Derived artifacts:

- Summaries, classifications, recommendations

- Fine-tuned models or LoRA adapters trained on your data

Each needs explicit retention and deletion rules.

- Control plane (who can do what, and how we prove it)

- Access control:

- Which services / users can call which models.

- Policy:

- Rules on allowed inputs / outputs.

- Observability:

- Auditable logs of calls, key parameters, and outcomes.

- Enforcement:

- Policy-as-code: machine-readable rules in CI/CD and runtime.

If you can articulate these four clearly for each AI workload, you’re in decent shape for:

– SOC2 / ISO questions,

– Internal risk reviews,

– And incident investigations.
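To make the four layers concrete, here is a minimal sketch of how one workload could be described and checked in code. The `Workload` type, the `MIN_BOUNDARY` mapping, and the specific category-to-boundary rules are hypothetical; they illustrate the shape of a policy, not a recommended one.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum
from typing import FrozenSet, List, Optional


class DataCategory(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    PERSONAL = "personal"
    HIGHLY_CONFIDENTIAL = "highly_confidential"


class Boundary(IntEnum):
    # Ordered from least to most controlled, mirroring the list above.
    EXTERNAL_MULTI_TENANT = 1
    VENDOR_SINGLE_TENANT = 2
    OWN_VPC_VENDOR_RUNTIME = 3
    SELF_HOSTED = 4


# Hypothetical policy: the least-controlled boundary each category may touch.
MIN_BOUNDARY = {
    DataCategory.PUBLIC: Boundary.EXTERNAL_MULTI_TENANT,
    DataCategory.INTERNAL: Boundary.EXTERNAL_MULTI_TENANT,
    DataCategory.PERSONAL: Boundary.VENDOR_SINGLE_TENANT,
    DataCategory.HIGHLY_CONFIDENTIAL: Boundary.SELF_HOSTED,
}


@dataclass(frozen=True)
class Workload:
    name: str
    categories: FrozenSet[DataCategory]  # layer 1: what you send
    boundary: Boundary                   # layer 2: where the model runs
    retention_days: Optional[int]        # layer 3: None means nobody decided
    audit_logged: bool                   # layer 4: can we prove what happened


def violations(w: Workload) -> List[str]:
    """Return human-readable policy problems for one workload."""
    problems = []
    for cat in sorted(w.categories, key=lambda c: c.value):
        if w.boundary < MIN_BOUNDARY[cat]:
            problems.append(f"{cat.value} data not allowed in {w.boundary.name}")
    if w.retention_days is None:
        problems.append("no retention period defined")
    if not w.audit_logged:
        problems.append("calls are not audit-logged")
    return problems
```

An empty list from `violations` does not prove compliance; it only means the workload passes the rules you chose to encode.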

Where teams get burned (failure modes + anti-patterns)

1. Treating LLM calls like harmless utility APIs

Pattern:

– Logs are not classified as sensitive.

– Prompts include:

– Full customer records,

– Internal tickets with PII,

– Database dumps.

Result:

– Log stores and vendor logs quietly accumulate high-risk data with no retention limits.

– A future breach or subpoena exposes much more than anyone realized.

Anti-pattern indicators:

– “We just log the whole request for debugging.”

– “We’ll figure out retention once we know what’s useful.”

2. “Enterprise plan” complacency

Pattern:

– Someone negotiated an enterprise LLM contract with decent privacy terms.

– Engineers still:

– Use default public endpoints,

– Mix personal accounts and corporate accounts,

– Use unofficial SDKs that hit consumer APIs.

Result:

– Your actual data flows don’t match the contract or your internal assurances.

Anti-pattern indicators:

– Multiple API keys scattered in different repos.

– No central registry of which services use which AI endpoints.

– Security team finds new LLM usage from network logs, not from you.

3. Forgetting that vector stores are databases

Pattern:

– For RAG, teams embed:

– Customer tickets

– Legal docs

– Internal strategy decks

– They drop embeddings and metadata into:

– Managed vector DB SaaS,

– Or a self-hosted instance with lax auth.

Result:

– Sensitive content is now in a new datastore:

– With unclear access control,

– No classification tags,

– Weak backup/restore governance.

Anti-pattern indicators:

– No written answer to: “What PII can be stored as embeddings?”

– No retention/deletion policy for the vector DB.

– Treating embeddings as “anonymous” by default.

4. Opaque “AI features” with no audit trail

Pattern:

– LLMs used for:

– Risk scoring,

– Routing decisions,

– Drafting communications sent to users.

– No logs of:

– What prompt was sent,

– What context was used,

– Which model/version produced a given output.

Result:

– When something goes wrong (e.g., biased decision or harmful content), there’s no audit path.

– Fails basic model risk and governance expectations.

Anti-pattern indicators:

– “We just call the LLM and trust the answer.”

– Logs show “task=classify_user” but not the actual inputs/outputs.
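As one possible shape for the missing audit trail, here is a minimal sketch of a per-call record. The function name, the field names, and the default of storing SHA-256 hashes instead of raw text are assumptions for illustration. Hashes let you verify later that a given prompt produced a given output without copying sensitive text into yet another log. Whether to also keep the raw text depends on your data classification and retention rules.

```python
import hashlib
import json
from datetime import datetime, timezone


def _sha256(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def build_audit_record(task, model, model_version, prompt, context_ids,
                       output, store_raw_text=False):
    """Return a JSON-serializable record describing a single LLM call."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "task": task,
        "model": model,
        "model_version": model_version,
        # IDs of the documents or rows used as context, not their content.
        "context_ids": list(context_ids),
        "prompt_sha256": _sha256(prompt),
        "output_sha256": _sha256(output),
    }
    if store_raw_text:
        # Only enable when the log store is access-controlled and has a TTL.
        record["prompt"] = prompt
        record["output"] = output
    return record


if __name__ == "__main__":
    rec = build_audit_record(
        task="classify_user",
        model="example-model",      # placeholder name
        model_version="2024-01",    # placeholder version
        prompt="Classify this account ...",
        context_ids=["ticket-123"],
        output="low_risk",
    )
    print(json.dumps(rec, indent=2))
```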

5. Governance by Google Doc

Pattern:

– There is a long “AI usage policy” document:

– Not enforced anywhere.

– Not referenced in code.

– Unknown to half the engineers.

Result:

– Shadow AI continues,

– Compliance teams are appeased on paper,

– Real risk posture is unchanged.

Anti-pattern indicators:

– Policies with no corresponding tests, guards, or CI checks.

– New AI services ship without any formal review process.

Practical playbook (what to do in the next 7 days)

You won’t implement perfect AI governance in a week, but you can build a concrete foundation.

1. Inventory first, argue later (1–2 days)

Create a lightweight AI system inventory:

- Ask in engineering channels + review code search:

- Which services call LLM or embedding APIs?

- Which vendors / endpoints are used?

- For each workload, capture:

- Service name & owner

- Vendor + endpoint

- Data categories involved (public/internal/PII/secrets)

- Where prompts/responses/embeddings are stored

- Whether there’s any retention logic

Put this in a simple spreadsheet or YAML; high signal, low ceremony.
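If you would rather keep the inventory next to the code, the fields above map onto a small record type. This is a sketch under assumptions: `InventoryEntry`, `missing_retention`, the vendor name, and the endpoints in the usage example are all made up for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class InventoryEntry:
    service: str
    owner: str
    vendor: str
    endpoint: str
    data_categories: List[str]  # e.g. "public", "internal", "pii", "secrets"
    storage_locations: List[str] = field(default_factory=list)
    retention_days: Optional[int] = None  # None = no retention logic yet


def missing_retention(entries):
    """Names of services that store AI data but have no retention logic."""
    return [
        e.service
        for e in entries
        if e.storage_locations and e.retention_days is None
    ]


def to_json(entries):
    """Serialize the inventory so it can be committed or diffed."""
    return json.dumps([asdict(e) for e in entries], indent=2)
```

A usage example with placeholder values:

```python
entries = [
    InventoryEntry("support-bot", "team-support", "ExampleVendor",
                   "https://llm.example.com/v1/chat", ["pii"],
                   ["log aggregator"]),
    InventoryEntry("docs-search", "team-platform", "ExampleVendor",
                   "https://llm.example.com/v1/embeddings", ["internal"],
                   ["vector db"], 180),
]
print(missing_retention(entries))
```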

2. Fix the most egregious data flows (1–2 days)

From that inventory, flag high-risk misalignments:

- Any flows sending:

- Secrets (keys, passwords),

- Full customer records,

- Health / financial data

to external multi-tenant models.

- Any flows storing full prompts with PII in:

- Log aggregators,

- Data lakes,

- Vector DBs

without retention or access controls.

For each high-risk case:

– Either:

– Strip/redact sensitive fields before the LLM call, or

– Move to a more controlled runtime (e.g., VPC-hosted model) with documented risk acceptance.
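The strip/redact option can start as a small function that runs before every LLM call and before every log write. The sketch below is illustrative only: the three regular expressions are simplistic assumptions that will miss many real cases and flag some harmless ones, so treat it as a starting point, not as a PII detector.

```python
import re

# Illustrative patterns only; real detection needs tuning and review.
PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    # key=value or key: value pairs with a suspicious key name.
    ("SECRET", re.compile(r"\b(?:api[_-]?key|password|token)\s*[:=]\s*\S+",
                          re.IGNORECASE)),
]


def redact(text):
    """Replace matches with [LABEL] and return (clean_text, counts)."""
    counts = {}
    for label, pattern in PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    clean, counts = redact("Contact jane@example.com, password=hunter2")
    print(clean)
    print(counts)
```

Returning the counts alongside the cleaned text gives you a cheap metric: a service whose prompts suddenly contain many redactions is worth a look.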

3. Establish basic data retention for AI artifacts (1 day)

You need concrete numbers, not vibes.

For:

– LLM request/response logs

– Conversation histories

– Vector embeddings and metadata

Define defaults (example only, not legal advice):

– Debug logs: 30 days, then delete.

– Non-critical embeddings: 180 days, then delete or re-index from source.

– User-visible conversation history: whatever aligns with your privacy policy (e.g., user-controlled deletion + max age).

Implement at least:

– Scheduled deletion jobs or built-in TTLs.

– A config file or code-level constant documenting those durations.

Document where vendor-side retention differs and what contract you rely on.
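A minimal sketch of the “config constant plus deletion job” idea follows, using the example durations above. The record shape (dicts with `id`, `kind`, and a timezone-aware `created_at`) and the function names are assumptions. The actual delete call depends on your datastore, so it is left out.

```python
from datetime import datetime, timedelta, timezone

# Example durations from the text above; not legal advice.
RETENTION_DAYS = {
    "debug_log": 30,
    "embedding": 180,
}


def is_expired(kind, created_at, now=None):
    """True if a record of this kind has outlived its retention period.

    Raises KeyError for unknown kinds on purpose: an artifact type with no
    retention rule should fail loudly rather than be kept forever.
    """
    now = now or datetime.now(timezone.utc)
    return now - created_at > timedelta(days=RETENTION_DAYS[kind])


def select_expired(records, now=None):
    """Return the ids of records that a scheduled job should delete."""
    return [
        r["id"]
        for r in records
        if is_expired(r["kind"], r["created_at"], now)
    ]
```

Where the datastore offers built-in TTLs, prefer them and keep `RETENTION_DAYS` as the single documented source for the values you configure there.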

4. Introduce minimal policy-as-code for AI usage (1–2 days)

Start small, focusing on enforceable rules:

a) Static checks in CI

– Detect direct use of disallowed endpoints (e.g., consumer LLM APIs).

– Enforce that all AI calls go through a shared client library.
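Both static checks can begin as a plain source scan. In the sketch below, the two hostnames and the `libs/ai_client` path are hypothetical placeholders, and only `.py` files are scanned. A regex scan like this only catches hostnames that appear literally in source, so it complements network egress controls rather than replacing them.

```python
import re
from pathlib import Path

# Hypothetical hostnames and path; substitute your own.
DISALLOWED_HOST = re.compile(r"api\.consumer-llm\.example\.com")
APPROVED_HOST = re.compile(r"llm-gateway\.internal\.example\.com")
SHARED_CLIENT_DIR = Path("libs/ai_client")


def scan(root):
    """Return (file, line number, reason) for each violation under root."""
    root = Path(root)
    findings = []
    for path in sorted(root.rglob("*.py")):
        rel = path.relative_to(root)
        in_shared_client = SHARED_CLIENT_DIR in rel.parents
        text = path.read_text(encoding="utf-8", errors="ignore")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if DISALLOWED_HOST.search(line):
                findings.append((rel.as_posix(), lineno, "disallowed endpoint"))
            elif APPROVED_HOST.search(line) and not in_shared_client:
                # Approved endpoint, but called from outside the shared client.
                findings.append((rel.as_posix(), lineno, "bypasses shared client"))
    return findings


def main(root="."):
    """Print findings and return a process exit code for CI."""
    findings = scan(root)
    for file, lineno, reason in findings:
        print(f"{file}:{lineno}: {reason}")
    return 1 if findings else 0
```

In a pipeline, calling `sys.exit(main("."))` from a small wrapper script is enough to fail the build when anything is found.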

b