The AWS Serverless Tax: Where You’re Overpaying (and Breaking Reliability) Without Noticing

Why this matters this week

Multiple teams I’ve spoken with in the last month have quietly rolled back “fully serverless” stacks on AWS—not because serverless doesn’t work, but because:

- Costs were unpredictable and often 2–5x the EC2 equivalent.

- Latency SLOs were getting blown up by cold starts and fan-out patterns.

- Observability degraded to the point where “what happened?” took hours to answer.

- Platform teams couldn’t give developers a sane paved road across Lambda, Step Functions, EventBridge, DynamoDB, and API Gateway.

This is not a “serverless is bad” take. It’s: uncontrolled serverless is expensive and fragile, and many orgs are now paying a hidden “serverless tax”.

The good news: with some explicit patterns for cost, reliability, and observability, you can keep the good parts of serverless and stop the bleed.

What’s actually changed (not the press release)

In the last 12–18 months on AWS, the practical landscape for serverless & cloud engineering has shifted:

1. Workloads moved up the stack

- More “core” business systems (payments, orchestration, ETL, user-facing APIs) are now built on Lambda, Step Functions, EventBridge, DynamoDB, SQS, and Kinesis.

- These are no longer sidecar “growth hacks”; they’re critical paths with uptime and latency SLOs.

2. Serverless pricing and knobs matured

- Lambda: tiered duration pricing, 1 ms billing granularity, provisioned concurrency, and SnapStart.

- DynamoDB: auto scaling and on-demand capacity modes are widely used, and features such as transactions and global tables are now mainstream.

- Step Functions: the choice between Standard workflows (billed per state transition) and Express workflows (billed per request and duration) changes how you design workflows.

- This gives you more levers, but also more ways to design yourself into a cost or reliability trap.

3. Platform engineering expectations went up

- Teams expect an internal platform: templates, golden paths, self-service, policy-as-code.

- Ad-hoc CDK/Serverless Framework repos per team, each with hand-rolled patterns, no longer scale.

4. Observability has become the gating factor

- Distributed traces across Lambda, API Gateway, Step Functions, and EventBridge are no longer “nice to have”.

- Cold starts, retries, DLQs, and partial failures create non-obvious failure modes that require serious logging, tracing, and metrics.

In other words: the technology got more powerful, and the workloads more critical, but many teams are still using 2019-era patterns and mental models.

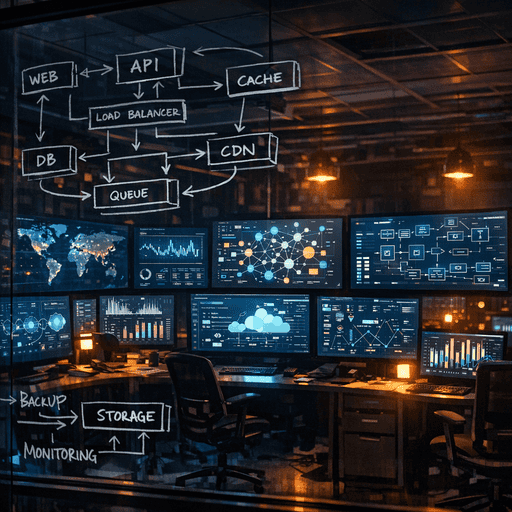

How it works (simple mental model)

Here’s a simple way to think about AWS serverless architecture that’s actually useful for decisions:

1. Three planes, not one monolith of “serverless”

Break your mental model into three planes:

- Compute plane: Lambda, ECS or EKS on Fargate, and sometimes the “glue” work done inside Step Functions.

- Data plane: DynamoDB, S3, RDS/Aurora Serverless, Kinesis, MSK, SQS.

- Control/event plane: EventBridge, Step Functions, SNS, API Gateway, AppSync.

Most pain comes from treating these as one blob of “event-driven microservices” instead of three planes with different failure and cost characteristics.

2. Cost vs latency vs durability triangle

For any given flow, you’re trading off among:

- Cost per request (includes idle + spiky load cost)

- Latency (P95/P99, including cold starts and network hops)

- Durability / ordering guarantees (exactly-once vs at-least-once, ordering, replay)

Roughly:

- Lambda + EventBridge + Step Functions (Standard) → higher per-request overhead, good durability, good fan-out, higher tail latency.

- Lambda behind API Gateway, hitting DynamoDB → good cost for bursty workloads, but risk of DynamoDB hot partitions and API Gateway costs that balloon at high request volume.

- Fargate services + SQS/Kinesis, writing to Aurora/DynamoDB → lower overhead at scale, more predictable latency, more infrastructure to manage.

3. “Line of stability” for your org

Every org has a practical line of stability:

- Above the line: services that must be boring, stable, and cost-predictable.

- Below the line: services where developer speed and elasticity matter most.

For workloads above the line (payments, auth, critical reporting), you’ll generally want:

- Fewer hops (less choreography)

- More static capacity (Fargate/ECS or provisioned capacity)

- Stricter schemas and contracts

For below-the-line workloads (experimental features, internal automations, async processing), Lambda + EventBridge + Step Functions is often ideal.

The mistake: putting everything above the line into maximal serverless fan-out architectures.
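The line of stability is a judgment call. Writing the rule down makes it reviewable, and the sketch below is one hypothetical way to do that. The `Workload` fields and the 300 ms "tight SLO" threshold are assumptions for illustration, not part of any AWS tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Workload:
    name: str
    business_critical: bool       # e.g. payments, auth, critical reporting
    p99_slo_ms: Optional[float]   # None when the workload has no latency SLO
    steady_traffic: bool          # roughly flat load rather than bursty/experimental


def above_the_line(w: Workload, tight_slo_ms: float = 300.0) -> bool:
    """Hypothetical rule of thumb: critical workloads, or steady workloads with a
    tight latency SLO, sit above the line of stability."""
    tight_latency = w.p99_slo_ms is not None and w.p99_slo_ms <= tight_slo_ms
    return w.business_critical or (tight_latency and w.steady_traffic)


def default_shape(w: Workload) -> str:
    """Map the classification to the default architecture described in the text."""
    if above_the_line(w):
        return "few hops, static/provisioned capacity, strict schemas"
    return "Lambda + EventBridge + Step Functions"


payments = Workload("payments", business_critical=True, p99_slo_ms=500, steady_traffic=True)
nightly_export = Workload("nightly-export", business_critical=False, p99_slo_ms=None, steady_traffic=False)
print(default_shape(payments))        # few hops, static/provisioned capacity, strict schemas
print(default_shape(nightly_export))  # Lambda + EventBridge + Step Functions
```

The rule itself matters less than the fact that it is explicit. A platform team can encode it in a service template and argue about the thresholds in code review.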

Where teams get burned (failure modes + anti-patterns)

1. “Lambda for everything” architecture

Symptoms:

- Hundreds of tiny functions orchestrated via EventBridge.

- No clear boundaries; lots of cross-region, cross-account hops.

- Hard to answer “what did this user action actually do?”

Why it hurts:

- Cost: Every hop (API Gateway, EventBridge, Lambda) has a per-invocation cost. You end up paying a tax for every internal call.

- Latency: Cold starts + network hops blow out P95 and P99.

- Debuggability: Failure domains are microscopic but poorly observable.
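The per-hop tax is easy to underestimate because each charge is tiny. The sketch below adds up per-request charges along a path. It assumes illustrative us-east-1 list prices (API Gateway REST API, EventBridge custom events, SQS, Lambda requests plus x86 duration) and ignores free tiers and volume tiers. The two example paths and their durations and memory sizes are hypothetical.

```python
# Assumed, illustrative us-east-1 list prices in USD. Check the current AWS
# pricing pages for your region; free tiers and volume tiers are ignored.
PRICE_PER_MILLION = {
    "apigw_rest": 3.50,   # API Gateway REST API requests
    "eventbridge": 1.00,  # EventBridge custom events published
    "sqs": 0.40,          # SQS standard queue requests
}
LAMBDA_PER_MILLION_REQUESTS = 0.20
LAMBDA_PER_GB_SECOND = 0.0000166667  # x86 duration price


def hop_cost(hop: dict) -> float:
    """Cost of one request passing through a single hop."""
    if hop["kind"] == "lambda":
        # Per-request charge plus GB-seconds (duration is billed in 1 ms steps).
        gb_seconds = (hop["memory_mb"] / 1024) * (hop["duration_ms"] / 1000)
        return LAMBDA_PER_MILLION_REQUESTS / 1e6 + gb_seconds * LAMBDA_PER_GB_SECOND
    return PRICE_PER_MILLION[hop["kind"]] / 1e6


def cost_per_1k_requests(path: list) -> float:
    return 1000 * sum(hop_cost(h) for h in path)


def fn(duration_ms: float, memory_mb: int) -> dict:
    return {"kind": "lambda", "duration_ms": duration_ms, "memory_mb": memory_mb}


# Fine-grained: API -> Lambda -> EventBridge -> Lambda -> EventBridge -> Lambda
fan_out = [{"kind": "apigw_rest"}, fn(50, 512), {"kind": "eventbridge"},
           fn(30, 256), {"kind": "eventbridge"}, fn(30, 256)]
# Coarse: API -> one Lambda doing the same work in-process
coarse = [{"kind": "apigw_rest"}, fn(110, 512)]

print(f"fan-out: ${cost_per_1k_requests(fan_out):.4f} per 1k requests")
print(f"coarse:  ${cost_per_1k_requests(coarse):.4f} per 1k requests")
```

With these assumed prices, API Gateway is the largest single line item on both paths. The fan-out path then adds an EventBridge charge and a Lambda request charge for every internal hop, before counting any latency cost.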

Better pattern:

- Use Lambda for edge and glue:

- Ingress/egress (APIs, webhooks, scheduled jobs).

- Lightweight enrichment, validation, and dispatch.

- Keep the core of high-throughput or critical workflows in:

- Fargate/ECS services, or

- Coarser-grained Lambdas triggered via SQS/SNS/Kinesis with clear ownership.
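Here is a minimal sketch of a coarser-grained Lambda triggered by SQS. One function owns a whole pipeline and runs its steps as in-process calls instead of separate invocations. The message `type` field and the `validate`/`enrich`/`persist` steps are hypothetical. The `batchItemFailures` return shape is Lambda's partial batch response for SQS. It only takes effect if `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json
from typing import Callable


def validate(msg: dict) -> dict:
    if "order_id" not in msg:
        raise ValueError("missing order_id")
    return msg


def enrich(msg: dict) -> dict:
    return {**msg, "currency": msg.get("currency", "USD")}


def persist(msg: dict) -> dict:
    # Placeholder for the single write to your data store (e.g. DynamoDB).
    return msg


# One coarse Lambda owns the whole "order.created" pipeline; the steps are
# plain function calls rather than separate Lambda invocations.
PIPELINES: dict = {
    "order.created": [validate, enrich, persist],
}


def handler(event: dict, context=None) -> dict:
    """SQS-triggered entry point. Reports only the failed messages so that
    SQS retries those, not the whole batch."""
    failures = []
    for record in event["Records"]:
        try:
            msg = json.loads(record["body"])
            steps: list[Callable[[dict], dict]] = PIPELINES[msg["type"]]
            for step in steps:
                msg = step(msg)
        except Exception:
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Ownership stays clear, because one deployable unit owns one pipeline. You pay for one invocation per batch rather than one per step per message.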

2. DynamoDB as “a JSON bucket with infinite RCU/WCU”

Symptoms:

- One giant multi-tenant table with generic “data” attributes.

- Hot partition keys (e.g., `tenantId = 'default'` for everything).

- On-demand billing mode with surprise 5–10x cost spikes.

Why it hurts:

- Cost unpredictability: On-demand looks great at first, then a noisy neighbor or batch job lands and your bill jumps.

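- Throttling under load: Without deliberate key design and access patterns, traffic concentrates on a few partitions and hits per-partition throughput limits (3,000 RCU and 1,000 WCU per partition), even when table-level capacity looks fine.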

- Debugging pain: Semi-schema-less data structures make it hard to reason about queries and performance.
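For a single hot key such as `tenantId = 'default'`, one common mitigation is write sharding: append a computed suffix to the partition key so writes spread across several partitions. The DynamoDB developer guide describes this technique. It is not something the original text prescribes. The sketch below is hypothetical: the `#` separator, the shard count, and hashing on `item_id` are all assumptions.

```python
import hashlib

SHARDS = 10  # hypothetical shard count; size it from peak writes vs. per-partition limits


def sharded_pk(logical_key: str, item_id: str, shards: int = SHARDS) -> str:
    """Spread one hot logical key (e.g. tenantId='default') over `shards`
    partition key values. The suffix is derived from item_id, so a reader
    who knows the item_id can recompute the exact key."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % shards
    return f"{logical_key}#{shard}"


def all_shard_keys(logical_key: str, shards: int = SHARDS) -> list:
    """Keys to query (and merge) when you need every item for the logical key."""
    return [f"{logical_key}#{i}" for i in range(shards)]


keys = {sharded_pk("default", f"order-{n}") for n in range(1000)}
```

The trade-off is on the read side. "Give me everything for this tenant" now becomes `SHARDS` queries whose results you merge. That cost is usually acceptable when writes are the hot path.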

Better pattern:

- Design per-use-case tables with explicit access patterns.

- Use provisioned capacity with auto scaling for steady flows; on-demand for truly spiky, low-volume workloads.

- Explicit KPIs: cost per 1k requests, throttles per minute, hot-partition alarms.
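The provisioned-versus-on-demand choice comes down to a break-even utilization, which is straightforward to compute. The sketch below uses assumed, illustrative write prices; substitute the current ones for your region. It also assumes 1 write request unit per write (items up to 1 KB, non-transactional) and a 70% auto scaling target. Both are simplifying assumptions.

```python
# Assumed, illustrative prices in USD; substitute current prices for your region.
ON_DEMAND_PER_MILLION_WRU = 0.625    # on-demand write request units
PROVISIONED_PER_WCU_HOUR = 0.00065   # provisioned write capacity unit-hours
HOURS_PER_MONTH = 730


def on_demand_monthly(avg_writes_per_sec: float) -> float:
    # Assumes 1 WRU per write (items <= 1 KB, non-transactional).
    writes = avg_writes_per_sec * 3600 * HOURS_PER_MONTH
    return writes / 1e6 * ON_DEMAND_PER_MILLION_WRU


def provisioned_monthly(avg_writes_per_sec: float, target_utilization: float = 0.7) -> float:
    # Simplification: auto scaling holds provisioned WCU near avg / target.
    wcu = avg_writes_per_sec / target_utilization
    return wcu * HOURS_PER_MONTH * PROVISIONED_PER_WCU_HOUR


def breakeven_utilization() -> float:
    """Average utilization above which provisioned capacity is cheaper."""
    on_demand_per_wcu_hour = 3600 / 1e6 * ON_DEMAND_PER_MILLION_WRU
    return PROVISIONED_PER_WCU_HOUR / on_demand_per_wcu_hour


print(f"break-even utilization: {breakeven_utilization():.0%}")
print(f"100 writes/s on-demand:   ${on_demand_monthly(100):,.2f}/month")
print(f"100 writes/s provisioned: ${provisioned_monthly(100):,.2f}/month")
```

Under these assumptions, provisioned capacity wins once average utilization stays above roughly 29%, which steady flows usually clear. The model also ignores the delay before auto scaling reacts to a spike. That gap is why truly spiky, low-volume tables are the ones left on on-demand.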

3. Over-orchestrating with Step Functions

Symptoms:

- Giant workflow definitions with 50–100 states.

- Business logic embedded in ASL (Amazon States Language) rather than code.

- Cost shock when a high-throughput path runs through a Standard workflow.

Why it hurts:

- Cost: Standard workflows bill per state transition, so spend grows with (states per execution) × (executions) and accumulates fast on high-volume paths.

- Deployment friction: complex workflows are harder to evolve safely.

- Debuggability: logic spread between code and workflow config.
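To see why a high-throughput path on a Standard workflow is a cost shock, compare the two billing models directly. Standard workflows bill per state transition, and Express workflows bill per request plus duration × memory. The prices below are assumed, illustrative first-tier list prices. The 10M-executions flow is hypothetical.

```python
# Assumed, illustrative prices in USD; check current Step Functions pricing.
STANDARD_PER_1K_TRANSITIONS = 0.025   # Standard: billed per state transition
EXPRESS_PER_MILLION_REQUESTS = 1.00   # Express: billed per execution...
EXPRESS_PER_GB_SECOND = 0.00001667    # ...plus duration x memory (first tier)


def standard_monthly(executions: int, transitions_per_execution: int) -> float:
    return executions * transitions_per_execution / 1000 * STANDARD_PER_1K_TRANSITIONS


def express_monthly(executions: int, avg_duration_s: float, memory_mb: int = 64) -> float:
    gb_seconds = executions * avg_duration_s * (memory_mb / 1024)
    return executions / 1e6 * EXPRESS_PER_MILLION_REQUESTS + gb_seconds * EXPRESS_PER_GB_SECOND


# A hypothetical high-QPS flow: 10M executions/month, 8 state transitions,
# 0.5 s average duration.
print(f"Standard: ${standard_monthly(10_000_000, 8):,.2f}/month")
print(f"Express:  ${express_monthly(10_000_000, 0.5):,.2f}/month")
```

With these assumptions, the gap is roughly $2,000 versus $15 a month. Express is not a drop-in swap, though. It caps executions at five minutes and does not give Standard's exactly-once execution semantics, so it only fits short flows whose steps are idempotent.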

Better pattern:

- Use Step Functions for:

- Long-running, human-in-the-loop, or external dependency-heavy workflows (e.g., KYC checks, provisioning flows).

- Coarse orchestration across a few major steps.

- Keep business rules in code, in services/Lambdas.

- For high-QPS flows: prefer simple, code-based orchestration with queues + idempotent handlers.
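Queue-based orchestration only works if handlers tolerate redelivery, because SQS standard queues deliver at least once. The sketch below shows the claim-then-process pattern. `IdempotencyStore` is a hypothetical in-memory stand-in. In DynamoDB, the claim would typically be a `PutItem` with `ConditionExpression="attribute_not_exists(pk)"`.

```python
import threading
from typing import Any, Callable

IN_PROGRESS = "IN_PROGRESS"  # marker for a claimed-but-unfinished key


class IdempotencyStore:
    """Hypothetical in-memory stand-in for a table written with a conditional put."""

    def __init__(self) -> None:
        self._items = {}
        self._lock = threading.Lock()

    def put_if_absent(self, key: str, value: Any) -> bool:
        with self._lock:
            if key in self._items:
                return False
            self._items[key] = value
            return True

    def put(self, key: str, value: Any) -> None:
        with self._lock:
            self._items[key] = value

    def get(self, key: str) -> Any:
        with self._lock:
            return self._items.get(key)

    def delete(self, key: str) -> None:
        with self._lock:
            self._items.pop(key, None)


def handle_once(store: IdempotencyStore, key: str,
                process: Callable[[dict], Any], payload: dict) -> Any:
    """Complete `process` once per key, even if the queue redelivers the message."""
    if not store.put_if_absent(key, IN_PROGRESS):
        # Duplicate delivery: return the stored result (or IN_PROGRESS if a
        # concurrent delivery is still working on it).
        return store.get(key)
    try:
        result = process(payload)
    except Exception:
        store.delete(key)  # release the claim so a retry can run again
        raise
    store.put(key, result)
    return result
```

Prefer a business key (for example an order or payment ID) over the SQS `messageId` as the idempotency key. That way duplicates introduced upstream by producers are also caught.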

4. Observability as an afterthought

Symptoms:

- CloudWatch Logs only, no structured logging.

- No end-to-end trace IDs propagated across Lambda, API Gateway, and queues.

- Metrics exist, but no SLOs or alerts wired to them.

Why it hurts:

- Incident MTTR measured in hours, not minutes.

- Difficult cost attribution (who is burning Lambda or DynamoDB budget?).

- “It times out sometimes; we don’t know why” becomes a recurring theme.

Better pattern:

- Standardized logging contract in a shared library:

- Correlation IDs, tenant/user IDs, request IDs, key event types.

- Tracing from the edge (API Gateway / ALB) through to downstream services.

- SLOs & alerts for:

- P95 latency for critical paths.

- Error rate per service.

- Queue age and DLQ volume.

- Cost per workload (tagging, Cost Explorer, or internal tools).
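A standardized logging contract can start as a small shared formatter. The sketch below uses only Python's standard `logging` module and emits one JSON object per line with fixed contract fields. The field names and the `x-correlation-id` header are assumed conventions, not AWS standards. On Lambda, Powertools for AWS Lambda offers a ready-made structured logger with correlation ID support if you prefer not to roll your own.

```python
import json
import logging
import sys
import uuid

# Hypothetical logging contract: every line carries these fields (None if unknown).
CONTRACT_FIELDS = ("correlation_id", "tenant_id", "request_id", "event_type")


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for name in CONTRACT_FIELDS:
            entry[name] = getattr(record, name, None)
        return json.dumps(entry)


def get_logger(name: str) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]   # replace rather than stack handlers
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def correlation_id_from(headers: dict) -> str:
    """Reuse the caller's correlation ID if present, otherwise mint one."""
    return headers.get("x-correlation-id") or str(uuid.uuid4())


log = get_logger("checkout")
cid = correlation_id_from({"x-correlation-id": "c-123"})
log.info("order accepted", extra={"correlation_id": cid, "tenant_id": "t-42",
                                  "request_id": "r-1", "event_type": "order.created"})
```

The same `correlation_id` then has to travel with every downstream message, for example in SQS message attributes or the EventBridge event detail. Otherwise the trail stops at the first queue.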

Practical playbook (what to do in the next 7 days)

Assuming you’re running production workloads on AWS with at least some serverless components, here is a focused 7-day plan.

Day 1–2: Inventory and identify your “expensive paths”

1. Map the top 3 critical user journeys

For each (e.g., “checkout”, “send campaign”, “upload report”):

- Draw the architecture: API Gateway / ALB → Lambda / Fargate → queues → DBs → external APIs.

- Note which hops use Lambda, Step Functions, EventBridge, and DynamoDB / S3 / RDS.

2. Pull cost & latency numbers per path

For each path, estimate:

- Requests per day / month.

- End-to-end P95 latency.

- Cost per 1k requests (Lambda, API Gateway, data transfer, DB operations).

It’s okay if the numbers are rough, as long as they’re explicit.

3. Flag obvious outliers

Look for:

- API Gateway or Lambda being > 40–50% of per-request cost.

- > 3–4 network hops for a single user-visible operation.

- Known cold start impacts on latency.
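The Day 1–2 inventory can live in a small script so the numbers stay explicit and comparable between paths. In the sketch below, the thresholds come from the ranges above: 45% as a cost share and more than 3 hops. The `checkout` figures are hypothetical placeholders, and P95 uses the nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class PathSnapshot:
    name: str
    requests_per_month: int
    latency_samples_ms: list
    cost_per_1k: dict          # USD per 1k requests, keyed by service
    network_hops: int
    cold_starts_visible: bool = False


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile (no interpolation)."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def monthly_cost(p: PathSnapshot) -> float:
    return sum(p.cost_per_1k.values()) * p.requests_per_month / 1000


def outlier_flags(p: PathSnapshot, share_limit: float = 0.45, max_hops: int = 3) -> list:
    """Apply the Day 1-2 heuristics; the default thresholds are hypothetical."""
    flags = []
    total = sum(p.cost_per_1k.values())
    for service in ("apigw", "lambda"):
        share = p.cost_per_1k.get(service, 0.0) / total if total else 0.0
        if share > share_limit:
            flags.append(f"{service} is {share:.0%} of per-request cost")
    if p.network_hops > max_hops:
        flags.append(f"{p.network_hops} network hops for one user-visible operation")
    if p.cold_starts_visible:
        flags.append("cold starts show up in end-to-end latency")
    return flags


checkout = PathSnapshot("checkout", 3_000_000, [float(ms) for ms in range(1, 101)],
                        {"apigw": 3.5, "lambda": 1.0, "dynamodb": 0.5}, network_hops=5)
print(percentile(checkout.latency_samples_ms, 95))  # 95.0
print(monthly_cost(checkout))                       # 15000.0
print(outlier_flags(checkout))
```

Keeping one snapshot per path in version control makes the Day 3–4 change measurable: rerun the script after the fix and compare.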

Day 3–4: Fix the worst serverless tax in one path

Pick a single path that’s both expensive and important. Apply one of these patterns:

1. API Gateway → Lambda → internal HTTP → Lambda

- Replace fan-out of many tiny internal Lambdas over HTTP with:

- A single coarser Lambda, or

- A small Fargate service behind an internal NLB.

- Goal: cut invocation counts and network hops.

2. Step Functions Standard for high-throughput short flows

- If you’re using Standard for short-lived, high-QPS flows, evaluate Express workflows or replace the workflow with simple code + SQS.

- Goal: reduce per-state-transition billing overhead.

3. DynamoDB on-demand where traffic is steady

- For tables with stable